Advancing AFEELA’s AI: Overcoming Bottlenecks in the Learning Cycle

In autonomous driving, perception is key. What a vehicle can see, hear, and understand determines how safely and intelligently it navigates the world. Building on our previous post about how AFEELA’s AI evolves from perception to reasoning, this article explores how we’re tackling two key challenges: improving learning efficiency and advancing multi-task learning in AFEELA’s intelligent driving systems.

Cracking the Code of Learning Efficiency

Faced with enormous streams of data, we used detailed profiling and optimization to reduce GPU idle time — achieving 3× higher GPU utilization.

Resolving Gradient Conflicts in Multi-Task Learning

By introducing new training strategies, we mitigated conflicting gradient signals across tasks — allowing multi-task models to outperform single-task baselines while improving learning stability.

Rethinking Map Utilization

With a Transformer-based perception model that fuses SD map data and real-time sensor input, our AI is advancing to drive intelligently without maps — and become even smarter when maps are available.

Cracking the Code of Learning Efficiency

Training an AI that interprets massive, multi-source datasets isn’t just about designing smarter algorithms. It’s about making learning faster, leaner, and more efficient to enable real-time AI reasoning for real-world driving.

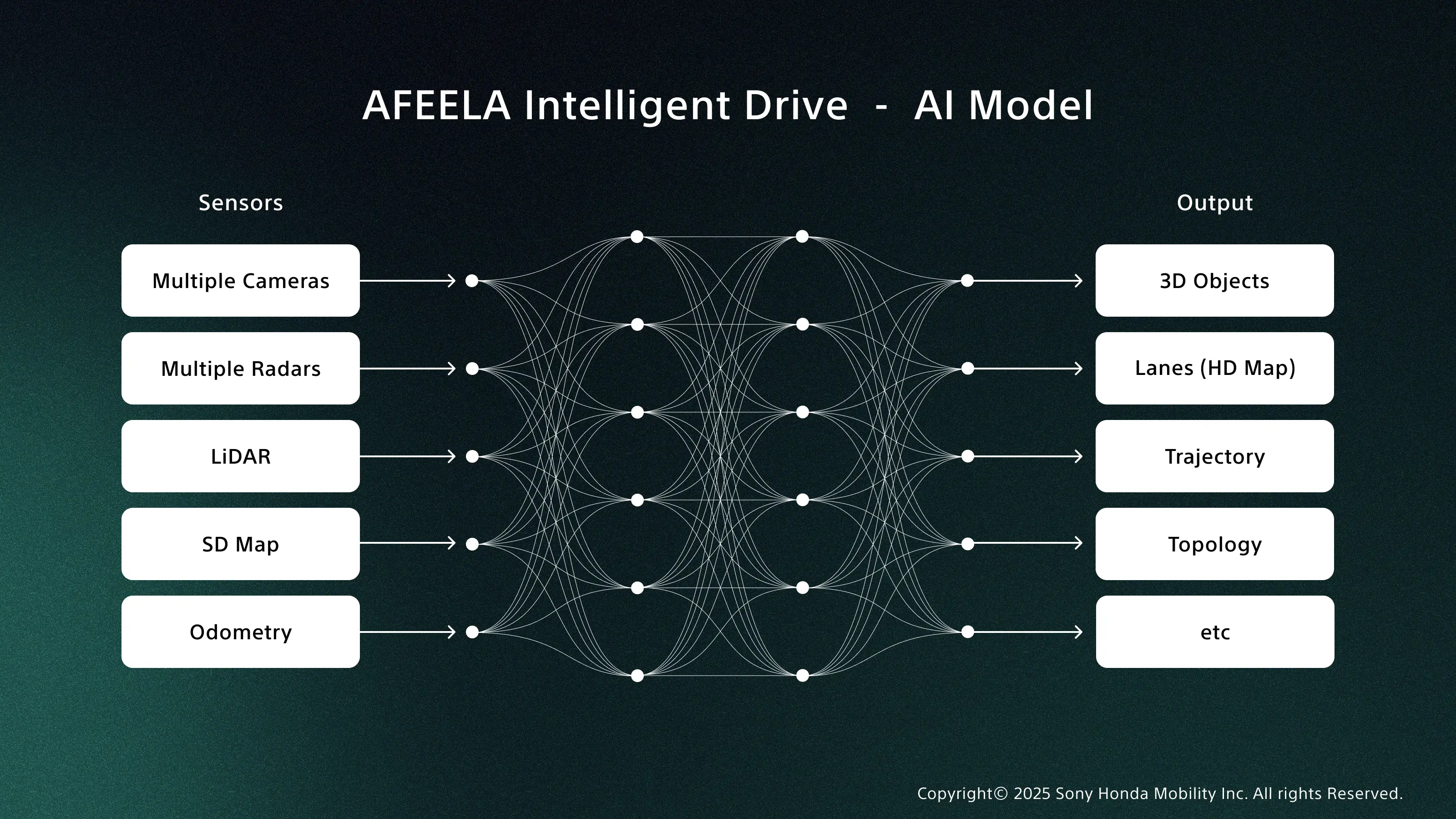

AFEELA’s AI model processes enormous volumes of input from high-resolution sensors, including LiDAR, multiple cameras, and radars. This results in large intermediate memory loads and bottlenecks in CPU-side operations such as data loading and CPU-GPU memory transfer. These slowdowns can leave the GPU idle and limit overall training speed.
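To make the effect of CPU-side stalls concrete, here is a rough back-of-the-envelope model of GPU utilization per training step. It is only a sketch: the function name `gpu_utilization` and the timings are hypothetical and chosen for illustration, not taken from our profiling data.

```python
def gpu_utilization(load_s, transfer_s, compute_s, overlapped):
    """Fraction of each training step during which the GPU is computing.

    All arguments are per-step durations in seconds (hypothetical inputs).
    """
    cpu_side_s = load_s + transfer_s
    if overlapped:
        # The next batch is prepared while the GPU works on the current one,
        # so a step takes as long as the slower of the two stages.
        step_s = max(cpu_side_s, compute_s)
    else:
        # Load, transfer, and compute run back to back.
        step_s = cpu_side_s + compute_s
    return compute_s / step_s


# Illustrative timings only, not measurements from a real pipeline.
serial = gpu_utilization(0.5, 0.1, 0.2, overlapped=False)
pipelined = gpu_utilization(0.5, 0.1, 0.2, overlapped=True)
print(f"serial: {serial:.2f}, pipelined: {pipelined:.2f}")
```

In this toy model, overlapping alone is not enough when the CPU side is slower than the GPU: utilization only approaches 1.0 once loading and transfer together take less time than compute.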

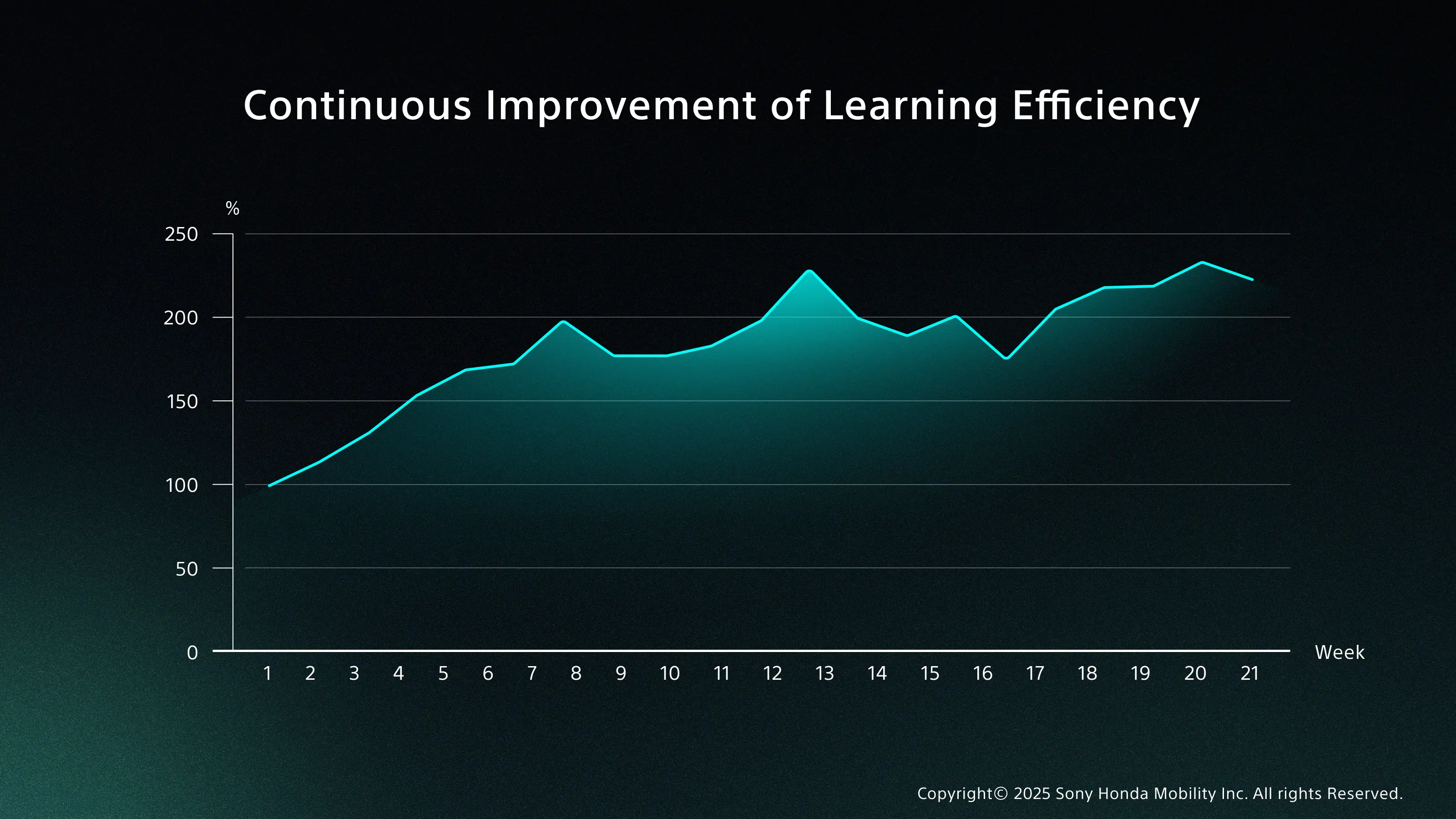

Through detailed profiling, we continuously identify and resolve these bottlenecks across the training pipeline. By accelerating data loader processing, reducing CPU-GPU data transfer times, and refining synchronization, we have significantly reduced GPU idle time – with GPU utilization rates now more than three times higher than during the early stages of development.
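One common way to hide data-loading latency is to prepare upcoming batches in the background while the current one is being processed. The sketch below shows the general idea with a bounded queue and a worker thread from the Python standard library. The `prefetch` helper and its `depth` parameter are hypothetical names for illustration; this is not a description of our actual data loader.

```python
import queue
import threading


def prefetch(batches, depth=2):
    """Yield items from `batches`, preparing up to `depth` of them ahead of time."""
    buffer = queue.Queue(maxsize=depth)
    done = object()  # sentinel marking the end of the stream

    def producer():
        try:
            for batch in batches:
                buffer.put(batch)  # blocks while the buffer is full
        finally:
            buffer.put(done)  # always unblock the consumer

    threading.Thread(target=producer, daemon=True).start()
    while True:
        batch = buffer.get()
        if batch is done:
            return
        yield batch


for batch in prefetch(range(3)):
    pass  # a training step would consume `batch` here
```

The bounded queue keeps memory use predictable: the producer can run at most `depth` batches ahead of the consumer. A production loader would also need to surface producer errors to the training loop, which this sketch leaves out.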

Because our AI model evolves almost weekly, each iteration brings new opportunities to refine and optimize performance. Our team embraces this rapid development cycle, continuously improving architecture, algorithms, and processes to accelerate progress and maintain peak efficiency.

Resolving Gradient Conflicts in Multi-Task Learning

As AFEELA’s AI takes on increasingly complex responsibilities, it must learn to perform multiple perception and reasoning tasks simultaneously. Managing how these tasks interact is a key focus of our large-scale AI development. A central problem is gradient conflict in multi-task learning, where competing tasks send the model conflicting learning signals that can reduce accuracy.

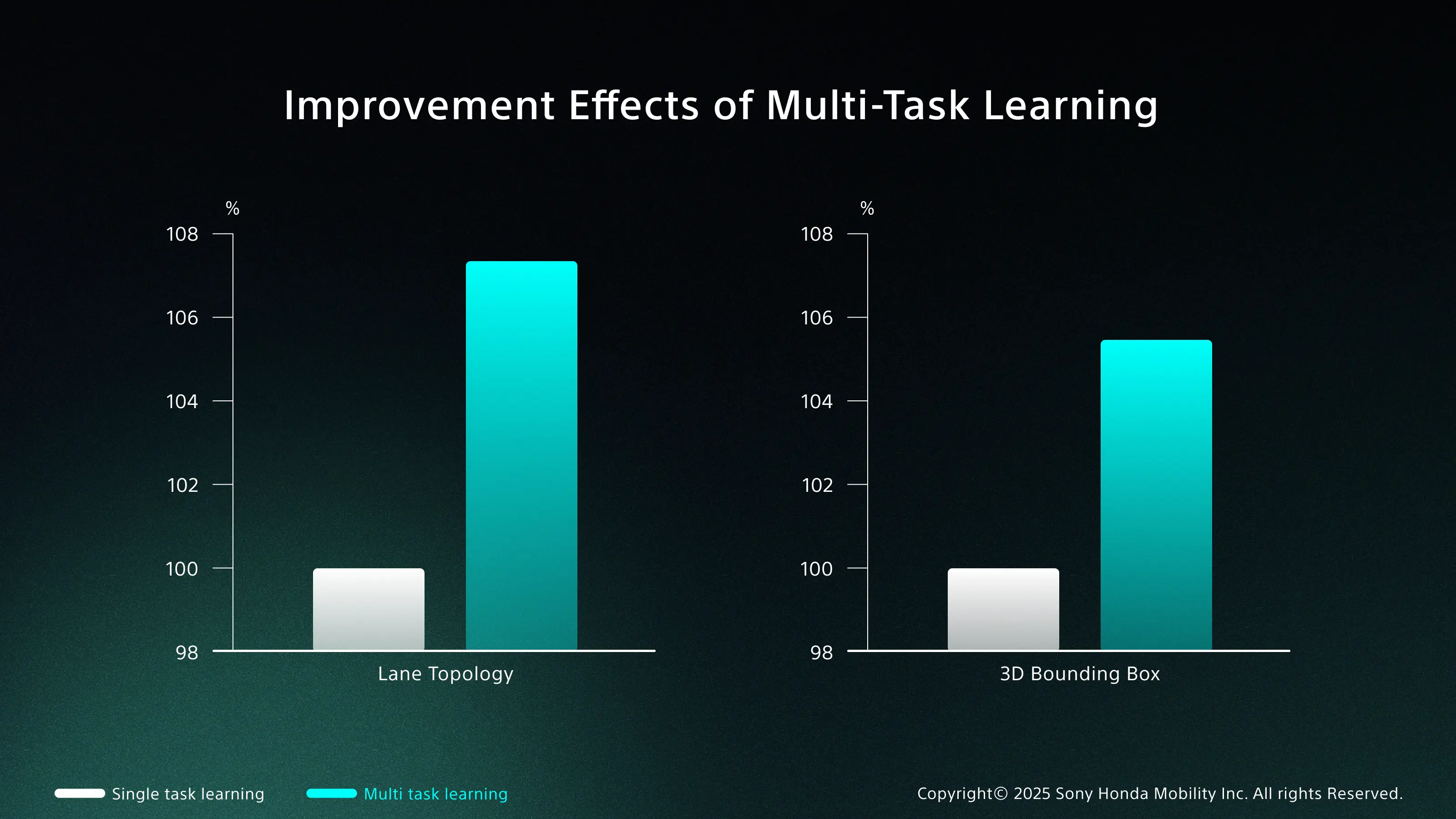

Closely related tasks, such as 3D and 2D object detection, often reinforce each other and lift overall performance. However, when tasks with different objectives are trained together, such as lane topology and object detection, their gradients can point in opposite directions. This gradient conflict hinders optimization, reducing learning efficiency and precision.
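A simple way to see gradient conflict is to compare the directions of two task gradients on the shared parameters: a negative cosine similarity means the tasks are pulling against each other. The sketch below measures this and then applies a projection in the style of the published PCGrad ("gradient surgery") technique, which removes the conflicting component. It is shown only as one well-known option, not as the method used in our training. The gradient values and names such as `g_lane` and `g_det` are made up for illustration.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cosine(a, b):
    """Cosine similarity; negative values indicate conflicting gradients."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))


def project_out_conflict(g, other):
    """PCGrad-style step: if g conflicts with `other`, drop g's component along it."""
    d = dot(g, other)
    if d >= 0:
        return list(g)  # no conflict, leave the gradient unchanged
    scale = d / dot(other, other)
    return [x - scale * y for x, y in zip(g, other)]


# Hypothetical gradients of two tasks on the same shared parameters.
g_lane = [1.0, 0.0]
g_det = [-1.0, 1.0]

similarity = cosine(g_lane, g_det)  # negative: the tasks disagree
g_lane_fixed = project_out_conflict(g_lane, g_det)
print(similarity, g_lane_fixed)
```

After projection, the adjusted gradient is orthogonal to the other task's gradient, so an update along it no longer works directly against that task.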

Besides gradient conflict, differences in loss scaling and inconsistencies in data augmentation can also cause performance to drop compared to single-task training. To address these issues, we’ve developed training methods that harmonize inter-task relationships. With these refinements, our multi-task models outperform single-task baselines.
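To illustrate the loss-scaling problem: if one task's loss is in the hundreds and another's is below one, the larger loss dominates the summed objective. One generic remedy is to divide each loss by a running average of its own magnitude so that every task contributes on a comparable scale. The sketch below assumes this approach purely for illustration. `LossBalancer`, its `momentum` parameter, and the loss values are hypothetical and do not describe our training method.

```python
class LossBalancer:
    """Rescale each task loss by a running average of its own magnitude."""

    def __init__(self, momentum=0.9, eps=1e-12):
        self.momentum = momentum
        self.eps = eps
        self.running = {}  # task name -> running average of its loss

    def combine(self, losses):
        total = 0.0
        for name, value in losses.items():
            previous = self.running.get(name, value)
            average = self.momentum * previous + (1 - self.momentum) * value
            self.running[name] = average
            # In a real framework the denominator would be treated as a
            # constant so that no gradient flows through it.
            total += value / (average + self.eps)
        return total


balancer = LossBalancer()
# Hypothetical raw losses with very different scales.
first = balancer.combine({"lane_topology": 200.0, "detection": 0.5})
second = balancer.combine({"lane_topology": 100.0, "detection": 0.5})
print(first, second)
```

On the first call both tasks contribute roughly 1.0 each despite a 400x difference in raw scale. On later calls a task whose loss has dropped relative to its running average contributes less than 1.0.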

To further improve learning efficiency at scale, we are exploring heterogeneous computing: selectively assigning computations to the processors best suited for them. GPUs, CPUs, and dedicated AI accelerators each have distinct strengths. By coordinating workloads across this mix, we aim to streamline the training pipeline and increase processing speed.

Smarter Navigation

Across the autonomous driving industry, there is a growing shift towards systems that operate without relying on high-definition (HD) maps.* AFEELA is advancing in this direction by developing an AI that can drive confidently without a detailed HD map, while still leveraging map information when available to strengthen perception and decision-making.

AFEELA’s approach integrates Standard Definition (SD) maps,* which provide static information such as lane geometry and intersection location, with real-time, high-precision environmental data from the vehicle’s sensor suite. This enables the AI to accurately understand both the vehicle’s position and its surroundings.

Furthermore, by feeding map vector data into a Transformer model, similar to how language or image data is processed, the AI can anticipate conditions beyond direct sight, such as blind corners. This combination of perception and prediction allows for safer and more adaptive driving.
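As a rough picture of what "map vector data" can look like before it reaches a Transformer, the sketch below turns a road polyline into a sequence of fixed-length segment tokens, much as text is split into word tokens. The feature layout (start point, end point, element type) and the name `polyline_to_tokens` are assumptions made for illustration, not the encoding used in AFEELA's model.

```python
def polyline_to_tokens(points, kind_id):
    """Convert a polyline into one token per segment.

    Each token is [x_start, y_start, x_end, y_end, kind], where `kind` is a
    hypothetical numeric label for the map element type (e.g. lane centerline).
    """
    return [
        [float(x0), float(y0), float(x1), float(y1), float(kind_id)]
        for (x0, y0), (x1, y1) in zip(points, points[1:])
    ]


# A made-up lane centerline in vehicle-centered coordinates (meters).
centerline = [(0.0, 0.0), (10.0, 0.0), (20.0, 5.0)]
tokens = polyline_to_tokens(centerline, kind_id=1)
print(tokens)
```

A polyline with N points yields N - 1 tokens. In a full model, each token would typically pass through a learned embedding layer before entering the Transformer.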

At the core of this development is our philosophy: to utilize all available information to the maximum extent. Whether or not a map is present, AFEELA’s AI continuously combines every reliable signal – sensor data, spatial cues, and contextual patterns – to maintain a comprehensive understanding of the environment.
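One reason attention-based models suit this "use whatever is available" philosophy is that attention operates on a variable-length set of tokens. The sketch below shows plain scaled dot-product attention over sensor tokens, with map tokens simply appended when a map exists. It omits learned projections and multiple heads, and the `fuse` helper and token values are hypothetical. It illustrates the mechanism only, not our model architecture.

```python
import math


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    width = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(width)]
    return output, weights


def fuse(query, sensor_tokens, map_tokens=None):
    """Attend over sensor tokens, plus map tokens when a map is available."""
    tokens = list(sensor_tokens) + list(map_tokens or [])
    return attend(query, tokens, tokens)


# Made-up embeddings; all tokens share the same width as the query.
sensor = [[1.0, 0.0], [0.0, 1.0]]
sd_map = [[1.0, 1.0]]
query = [1.0, 0.0]

_, weights_no_map = fuse(query, sensor)
_, weights_with_map = fuse(query, sensor, sd_map)
print(len(weights_no_map), len(weights_with_map))
```

The same function handles both cases: without a map the attention weights are spread over sensor tokens alone, and with a map the extra tokens simply join the set being attended to.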

*HD Map: High Definition Map. A precise 3D map created using LiDAR, containing lane-specific information with centimeter-level accuracy.

*SD Map: Standard Definition Map. A 2D map containing road-unit information with meter-level accuracy.

Our Strategy: Redefining Intelligent Mobility

At Sony Honda Mobility, we’re not defined by when we entered the market, but by the vision we’re building for the future of mobility. Our approach to intelligent mobility combines deep expertise in sensing and AI within a vertically integrated development model – linking hardware, software, and data into an evolving ecosystem.

As introduced in our previous post, our top priority is AI Reasoning – developing intelligence that goes beyond recognition to understand context, intent, and relationships. We’re now considering advancing this capability through the integration of language models, enabling the system to anticipate complex human and environmental behaviors, such as predicting a pedestrian’s next move at an intersection.

Our development concept is simple: maintain an extremely fast cycle. We continuously move through identification, improvement, implementation, and evaluation, refining architecture, algorithms, and parameters on a weekly basis. This rhythm of iteration allows us to integrate new technologies rapidly, optimize performance, and sustain a pace of innovation that meets the demands of real-world intelligence.

Rather than competing on speed of entry, we compete on depth: the precision of our sensors, the sophistication of our software, and the intelligence that connects them. Through this approach, AFEELA embodies what we believe is the next evolution of mobility: a seamless fusion of engineering excellence and adaptive intelligence designed to learn, reason, and evolve alongside the world it moves through.

The statements and information contained here are based on development stage data.
