Moving Beyond Perception: How AFEELA’s AI is Learning to Understand Relationships

Welcome to the Sony Honda Mobility Tech Blog, where our engineers share insights into the research, development, and technology shaping the future of intelligent mobility. Our mission as a new mobility tech company is to pioneer innovation that redefines mobility as a living, connected experience. Through this blog, we will take you behind the scenes of our brand, AFEELA, and the innovations driving its development.

In our first post, we will introduce the AI model powering AFEELA Intelligent Drive, AFEELA’s unique Advanced Driver-Assistance System (ADAS), and explore how it’s designed to move beyond perception towards contextual reasoning.

From ‘Perception’ to ‘Reasoning’

I am Yasuhiro Suto, Senior Manager of AI Model Development in the Autonomous System Development Division at Sony Honda Mobility (SHM). As we prepare for US deliveries of AFEELA 1 in 2026, we aim to develop a world-class AI model, built entirely in-house, to power our ADAS.

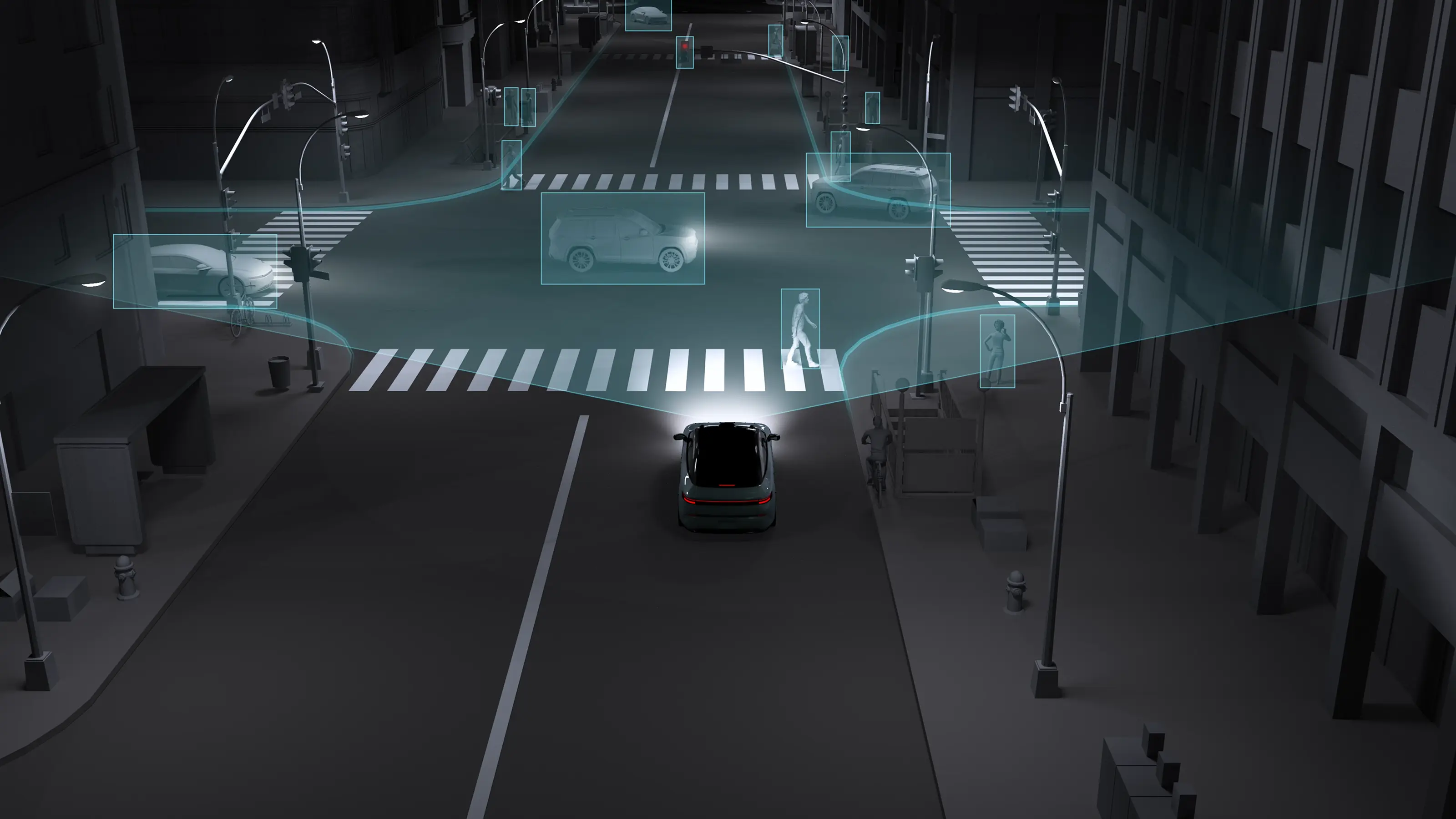

Our goal extends beyond conventional object detection. We aim to build an AI that understands relationships and context: how objects interact and what those relationships mean for real-world driving decisions. To achieve this, we integrate information from diverse sources, including cameras, LiDAR, radar, SD maps, and odometry, into a cohesive system. Together, they enable what we call “Understanding AI”: an intelligence capable not just of recognizing what is in view, but of reasoning about what it all means in context.
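
The post does not say how these streams are combined, but one prerequisite for any such integration is pairing measurements taken at nearly the same moment, since sensors run at different rates. The sketch below shows a simple nearest-timestamp matcher under stated assumptions: all sensors share one clock, a 50 ms tolerance (`max_skew`) is acceptable, and `Message`, `nearest`, and `build_frame` are hypothetical names, not part of AFEELA's software.

```python
from bisect import bisect_left
from dataclasses import dataclass


@dataclass
class Message:
    sensor: str       # illustrative names: "camera", "lidar", "radar", "odometry"
    timestamp: float  # capture time in seconds on a shared clock (assumed)
    payload: object   # raw sensor data, left opaque in this sketch


def nearest(messages, t):
    """Return the message closest in time to t; messages must be sorted by timestamp."""
    times = [m.timestamp for m in messages]
    i = bisect_left(times, t)
    candidates = messages[max(i - 1, 0): i + 1]
    return min(candidates, key=lambda m: abs(m.timestamp - t))


def build_frame(reference, streams, max_skew=0.05):
    """Pair a reference message with the closest message from every other stream.

    Returns None if any stream is empty or has no message within max_skew
    seconds, so badly out-of-sync data is dropped instead of being fused.
    """
    frame = {reference.sensor: reference}
    for name, messages in streams.items():
        if not messages:
            return None
        match = nearest(messages, reference.timestamp)
        if abs(match.timestamp - reference.timestamp) > max_skew:
            return None
        frame[name] = match
    return frame


# Usage: a LiDAR sweep at t = 0.100 s serves as the reference.
lidar = Message("lidar", 0.100, None)
streams = {
    "camera": [Message("camera", 0.067, None), Message("camera", 0.100, None)],
    "radar": [Message("radar", 0.090, None)],
}
frame = build_frame(lidar, streams)
print(sorted(frame))  # ['camera', 'lidar', 'radar']
```

Using a LiDAR sweep as the reference is only a choice made for this sketch; any stream could play that role.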

Precision from Above: AFEELA’s LiDAR with Sony SPAD Sensors

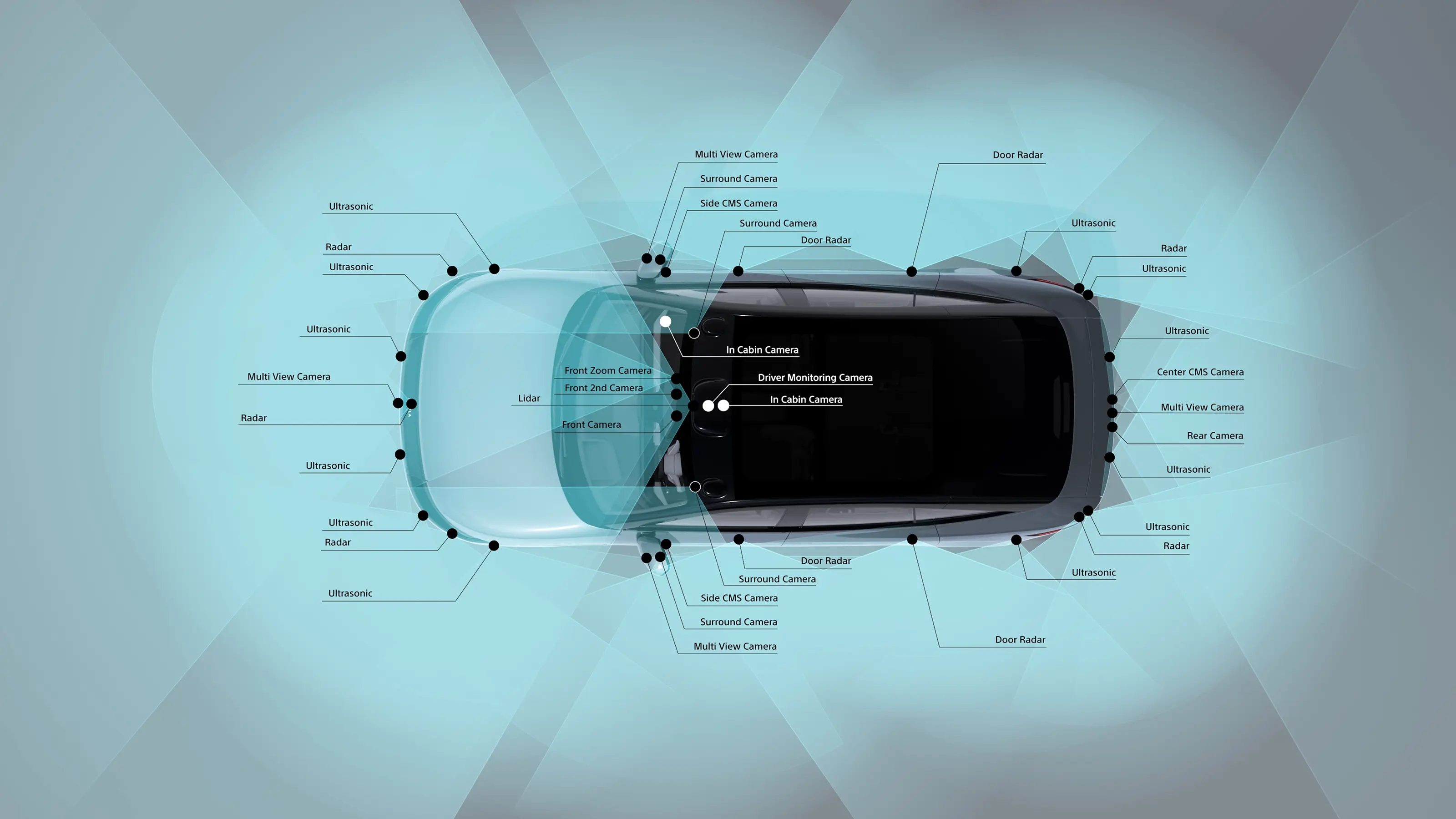

Achieving robust awareness requires more than a single sensor. AFEELA’s ADAS uses a multi-sensor fusion approach, integrating cameras, radar, and LiDAR to deliver precise, reliable perception across diverse driving conditions.

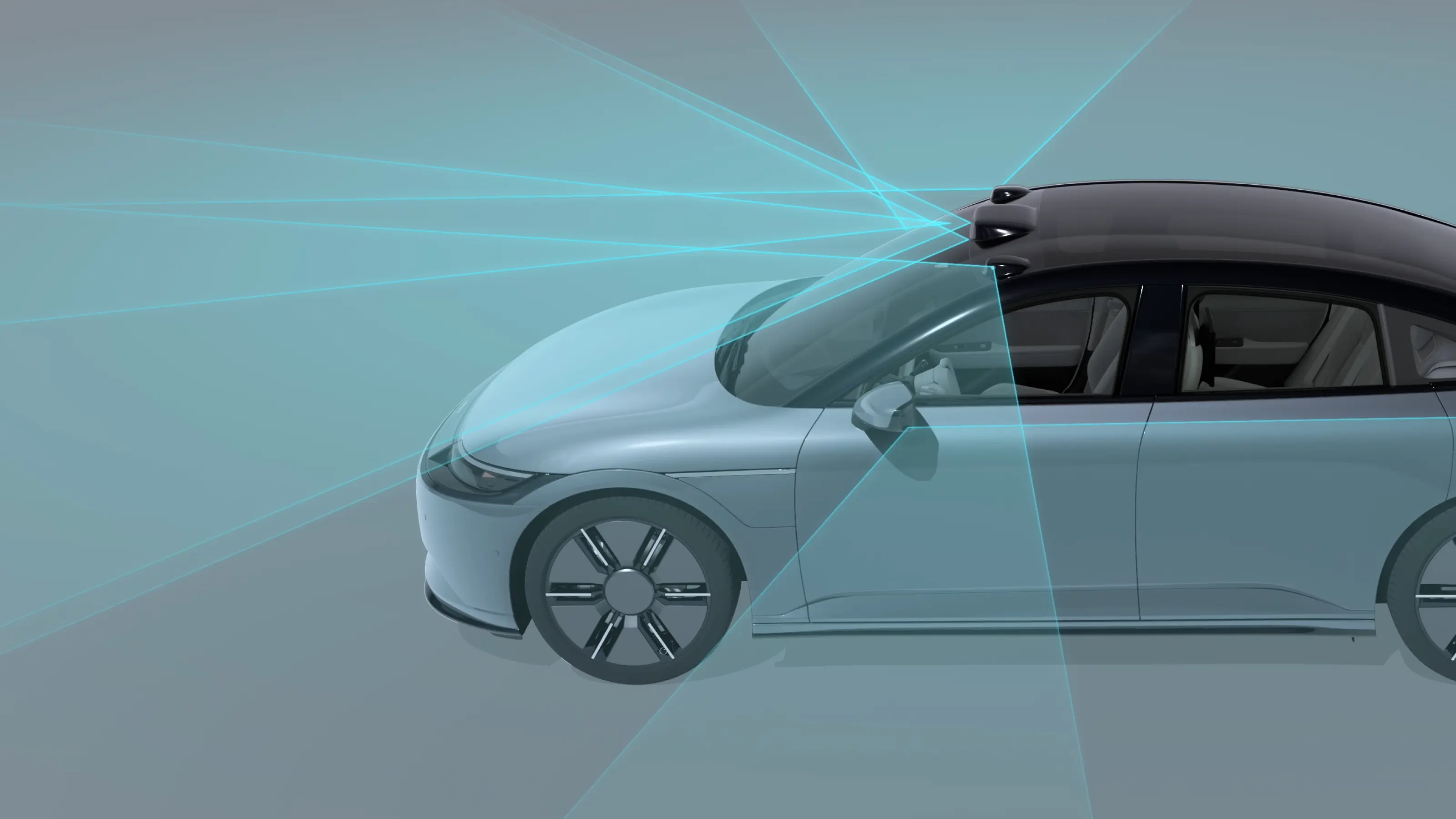

A key component of this approach is LiDAR, which uses lasers to measure the distance and shape of surrounding objects with exceptional accuracy. AFEELA is equipped with a LiDAR unit that uses a Sony-developed Single Photon Avalanche Diode (SPAD) as its light-receiving element. This Time-of-Flight (ToF) LiDAR captures high-density 3D point cloud data at up to 20 Hz, enhancing the resolution and fidelity of mapping.
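
Direct time-of-flight ranging rests on a simple relation: light travels to the target and back, so distance is d = c·Δt / 2. SPAD receivers can register individual photons, and a common way to range with them is to accumulate photon arrival times over many laser pulses into a histogram and take the peak bin as the echo. The sketch below illustrates that general technique with assumed values (a 1 ns bin width and a synthetic target at 150 m); it does not describe how Sony's SPAD unit processes data internally, and `tof_to_distance` and `distance_from_photon_times` are hypothetical helpers.

```python
from collections import Counter

SPEED_OF_LIGHT = 299_792_458.0  # m/s
FRAME_PERIOD_S = 1 / 20         # at 20 Hz, a new point cloud every 0.05 s


def tof_to_distance(round_trip_s):
    """Convert a round-trip time of flight (seconds) to one-way distance (metres)."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def distance_from_photon_times(arrival_times_s, bin_width_s=1e-9):
    """Estimate distance from many single-photon arrival times.

    Arrivals are binned into a histogram; ambient-light photons spread across
    many bins, while echo photons pile up in one, so the fullest bin is taken
    as the echo and its centre is converted to distance.
    """
    bins = Counter(int(t // bin_width_s) for t in arrival_times_s)
    peak_bin, _ = bins.most_common(1)[0]
    return tof_to_distance((peak_bin + 0.5) * bin_width_s)


# Usage: a target at 150 m, five echo photons plus evenly spread "ambient" photons.
round_trip = 2 * 150.0 / SPEED_OF_LIGHT            # about 1000.7 ns
echo = [round_trip + k * 1e-10 for k in range(-2, 3)]
ambient = [i * 37e-9 for i in range(1, 40)]        # one photon per bin, never the echo bin
estimate = distance_from_photon_times(echo + ambient)
print(round(estimate, 2))
```

With a 1 ns bin, each bin spans about 0.15 m of range, which bounds the precision of this naive peak picking; a real system would typically refine the peak with finer timing or interpolation.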

LiDAR significantly boosts the performance of our perception AI. In our testing, SPAD-based LiDAR improved object recognition accuracy, especially in low-light environments and at long ranges. In addition, by analyzing reflection intensity data, we are able to enhance the AI’s ability to detect lane markings and distinguish pedestrians from vehicles with greater precision.
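
Reflection intensity helps with lane markings because painted markings, especially retroreflective ones, typically return stronger echoes than the surrounding asphalt. The hand-written filter below illustrates that cue with a height gate and a fixed intensity threshold. It is a deliberately crude stand-in, not the learned approach the post describes: the thresholds, the normalised intensity scale, and the names `Point` and `lane_marking_candidates` are all assumptions.

```python
from typing import NamedTuple


class Point(NamedTuple):
    x: float          # forward, metres
    y: float          # left, metres
    z: float          # up, metres, in the same frame as ground_z
    intensity: float  # reflectance normalised to [0, 1] (assumed scale)


def lane_marking_candidates(points, ground_z=0.0, ground_tol=0.15, min_intensity=0.6):
    """Return near-ground points that reflect much more strongly than asphalt.

    The height gate keeps only returns from the road surface, and the
    intensity threshold keeps only the bright ones, such as lane paint.
    """
    return [
        p for p in points
        if abs(p.z - ground_z) <= ground_tol and p.intensity >= min_intensity
    ]


cloud = [
    Point(10.0, 1.7, 0.02, 0.85),  # painted line: on the ground and bright
    Point(10.0, 0.5, 0.01, 0.20),  # bare asphalt: on the ground but dark
    Point(12.0, 3.0, 1.10, 0.90),  # bright but elevated, e.g. a sign or a vehicle
]
marks = lane_marking_candidates(cloud)
print(marks == [cloud[0]])  # True
```

Fixed thresholds like these are brittle (worn paint, wet roads, varying range); passing intensity to a learned model as an input feature avoids hand-tuning them.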

We also challenged conventional wisdom when deciding on sensor placement. While many vehicles embed LiDAR in the bumper or B-pillars to preserve exterior design, we chose to mount the LiDAR and cameras on the rooftop. This position provides a wider, unobstructed field of view and minimizes blind spots caused by the vehicle body. The decision reflects more than a technical preference; it represents our engineering-first philosophy and a company-wide commitment to achieving the highest standard of ADAS performance.

Reasoning Through Topology to Understand Relationships Beyond Recognition

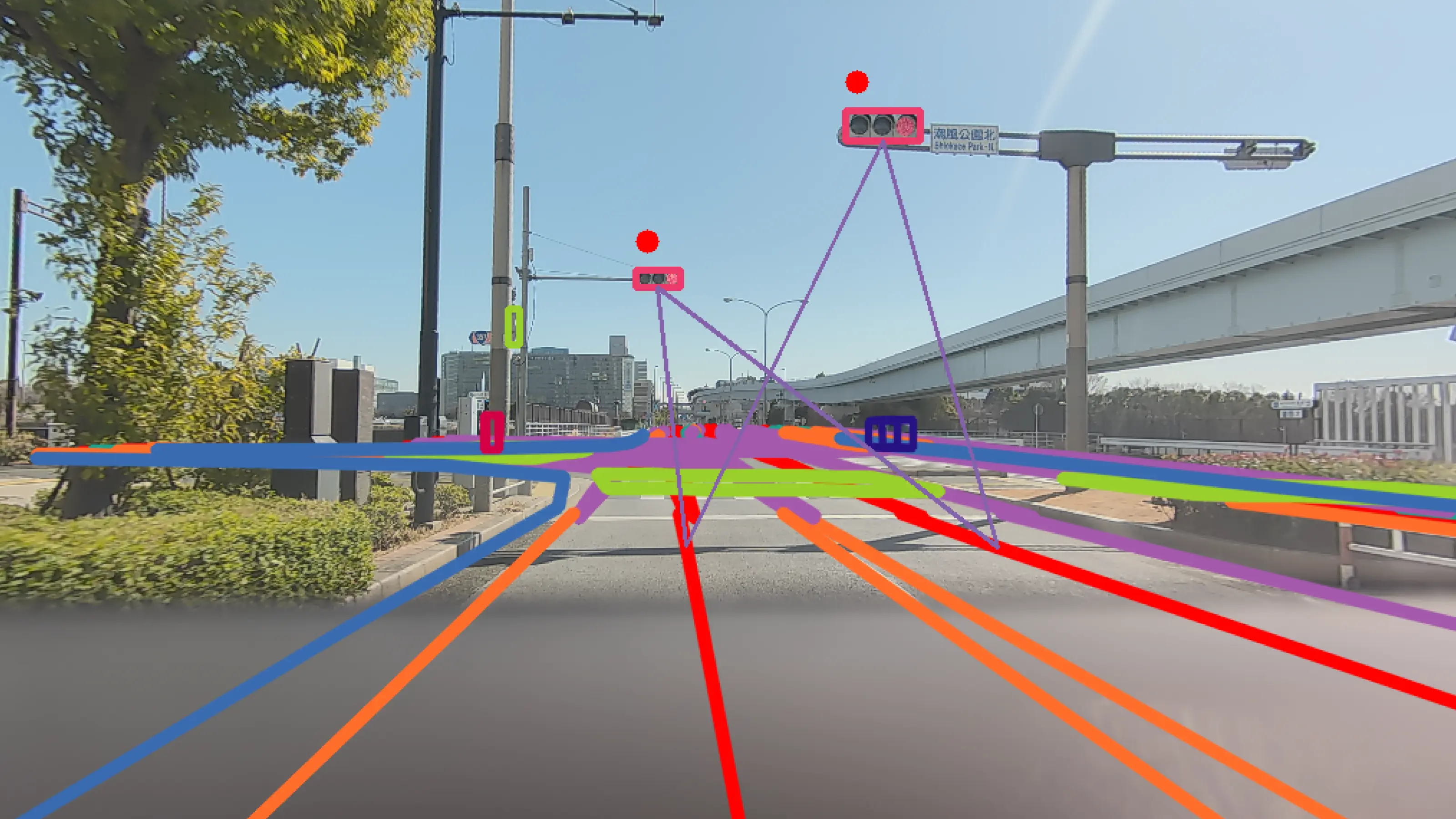

While LiDAR and other sensors capture the physical world in detail, AFEELA’s perception AI goes a step further. Its true innovation lies in its ability to move beyond object recognition (“What is this?”) to contextual reasoning (“How do these elements relate?”). The shift from Perception to Reasoning is powered by Topology, the structural understanding of how objects in a scene are spatially and logically connected. By modeling these relationships, our AI can interpret not just isolated elements but the context and intent of the environment. For example, in the “Lane Topology” task, the system determines how lanes merge, split, or intersect, and how traffic lights and signs relate to specific lanes. In essence, topology takes the AI beyond recognition toward genuine situational understanding.
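
To make the Lane Topology outputs concrete, here is one way the relations named above (merges, splits, and which lights and signs govern which lanes) could be stored once a model has predicted them. This is a hypothetical data structure for illustration only; it does not show how AFEELA's model represents topology internally, and it stores relations rather than inferring them.

```python
from collections import defaultdict


class LaneGraph:
    """Hypothetical container for lane-topology relations.

    Lanes are nodes and "continues into" links are directed edges; traffic
    elements (lights, signs) are attached to the lanes they govern.
    """

    def __init__(self):
        self.successors = defaultdict(set)
        self.predecessors = defaultdict(set)
        self.controls = defaultdict(set)  # traffic element id -> governed lane ids

    def connect(self, src, dst):
        """Record that lane src continues into lane dst."""
        self.successors[src].add(dst)
        self.predecessors[dst].add(src)

    def assign(self, element, lane):
        """Record that a traffic light or sign governs a lane."""
        self.controls[element].add(lane)

    def splits(self):
        """Lanes that continue into more than one lane."""
        return sorted(lane for lane, nxt in self.successors.items() if len(nxt) > 1)

    def merges(self):
        """Lanes fed by more than one lane."""
        return sorted(lane for lane, prev in self.predecessors.items() if len(prev) > 1)

    def elements_for(self, lane):
        """Traffic elements that govern the given lane."""
        return sorted(e for e, lanes in self.controls.items() if lane in lanes)


# Usage: lane A splits into B (straight) and C (left turn); C and D merge into E.
g = LaneGraph()
for src, dst in [("A", "B"), ("A", "C"), ("C", "E"), ("D", "E")]:
    g.connect(src, dst)
g.assign("light_1", "B")
g.assign("left_arrow", "C")
print(g.splits(), g.merges(), g.elements_for("C"))  # ['A'] ['E'] ['left_arrow']
```

Crossing relations at intersections could be recorded the same way, as another edge type between lanes.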

Even when elements on the road are physically far apart, such as a distant traffic light and the vehicle’s current lane, the AI can infer their relationship through contextual reasoning. The key to deriving these relationships is the Transformer architecture. Its “attention” mechanism automatically weighs how relevant each element of complex input data is to every other element and links the most relevant ones, allowing the AI to learn associations between spatially or semantically connected elements. It can even align information across modalities, such as 3D point cloud data from LiDAR and 2D images from cameras, without explicit pre-processing. For example, even though lane information is processed in 3D and traffic light information in 2D, the model can link the two automatically.

Because these reasoning tasks are highly abstract, consistency in the training data becomes critically important. At Sony Honda Mobility, we prioritize this by designing precise models and labeling guidelines that keep datasets consistent, ultimately improving accuracy and reliability. Through this topological reasoning, AFEELA’s AI evolves from merely identifying its surroundings to better understanding the relationships that define the driving environment.
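
The attention mechanism described in this section can be illustrated in a few lines. The sketch below is generic scaled dot-product cross-attention, with lane features from a 3D branch acting as queries and traffic-light features from a 2D branch acting as keys and values. It assumes both branches have already been projected to a shared embedding width, omits the learned projection matrices and multiple heads a real Transformer would use, and uses hand-picked toy vectors; it is not AFEELA's model.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, one query at a time.

    queries: lane embeddings from a 3D branch (one vector per lane)
    keys, values: traffic-light embeddings from a 2D image branch
    All vectors are assumed to be already projected to a shared width d.
    Returns (outputs, weights); weights[i][j] is how strongly lane i attends to light j.
    """
    d = len(queries[0])
    scale = 1.0 / math.sqrt(d)
    outputs, weights = [], []
    for q in queries:
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Each output is the attention-weighted mix of the value vectors.
        outputs.append([sum(wj * v[c] for wj, v in zip(w, values)) for c in range(len(values[0]))])
    return outputs, weights


# Usage: toy embeddings in which lane 0 matches light 0 and lane 1 matches light 1.
lanes = [[4.0, 0.0], [0.0, 4.0]]
lights = [[4.0, 0.0], [0.0, 4.0]]
light_states = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical one-hot states: red, green
outputs, weights = cross_attention(lanes, lights, light_states)
print([max(range(len(w)), key=w.__getitem__) for w in weights])  # [0, 1]
```

In a trained model the embeddings, and therefore the attention weights, are learned from data; the hand-set vectors here only show how the weights select which light each lane draws information from.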

Overcoming the Execution Efficiency Challenge with Transformers

While Transformer models bring powerful reasoning capabilities, deploying them in real-time automotive environments presents major challenges, particularly around execution efficiency. In the early stages of development, our Transformer-based models ran at one-tenth the efficiency of conventional CNNs, raising concerns about their viability for real-time driving assistance. The bottleneck was not computational power but memory access. The Transformer’s strength is its ability to freely link all elements, which requires frequent matrix multiplications across large data sets. This leads to constant memory reads and writes that kept us from fully utilizing the SoC’s performance. To solve this, we collaborated closely with Qualcomm to optimize the Transformer. Through architecture refinements and iterative tuning, we achieved a fivefold increase in efficiency over our initial baseline, enabling us to run large-scale models in real time in AFEELA’s ADAS. Although these improvements mark a major step forward, there is still room to close the efficiency gap between our Transformer models and CNNs, and our team continues to explore fundamental solutions to push real-time AI reasoning even further.
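
A rough arithmetic-intensity estimate shows why attention tends to be limited by memory rather than compute. Arithmetic intensity is the number of FLOPs performed per byte moved; when it falls below the hardware's ratio of peak compute to memory bandwidth, the processor waits on memory. The sketch below uses hypothetical sizes (2,000 tokens, 64-dimensional heads, 16-bit values) and idealized traffic counts, and does not reflect SHM's models or the Qualcomm SoC. It shows that the QK^T product is fairly compute-dense, but it produces an N × N score matrix whose row-wise softmax does only about one FLOP per byte moved. The last lines simply combine the two efficiency figures stated in the post, assuming both are measured on the same scale.

```python
def matmul_intensity(m, n, k, bytes_per_elem=2):
    """FLOPs per byte moved for C[m, n] = A[m, k] @ B[k, n].

    Idealized: each operand is read once and the result written once.
    """
    flops = 2 * m * n * k
    traffic = (m * k + k * n + m * n) * bytes_per_elem
    return flops / traffic


def softmax_intensity(n, bytes_per_elem=2, flops_per_elem=5):
    """FLOPs per byte for a row-wise softmax over an n x n attention-score matrix.

    flops_per_elem is a rough assumption (max, subtract, exp, sum, divide);
    the score matrix is read once and written once.
    """
    flops = flops_per_elem * n * n
    traffic = 2 * n * n * bytes_per_elem
    return flops / traffic


# Hypothetical sizes: 2,000 tokens, head width 64, 16-bit values.
n_tokens, head_dim = 2000, 64
score_bytes = n_tokens * n_tokens * 2  # one head's N x N score matrix in fp16
qk_intensity = matmul_intensity(n_tokens, n_tokens, head_dim)
sm_intensity = softmax_intensity(n_tokens)
print(score_bytes, round(qk_intensity, 1), sm_intensity)  # 8000000 60.2 1.25

# Efficiency figures from the post, assuming both use the same metric:
# start at 1/10 of CNN efficiency, then improve fivefold.
relative_to_cnn = 0.1 * 5
print(relative_to_cnn)  # 0.5
```

Fusing the score computation, softmax, and weighted sum into one kernel so the N × N matrix never leaves on-chip memory (the idea behind FlashAttention) is one well-known way to attack this kind of bottleneck; the post does not say which techniques SHM and Qualcomm used.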

Achieving ‘Real-World Usable Intelligence’ Through Multi-Modal Integration

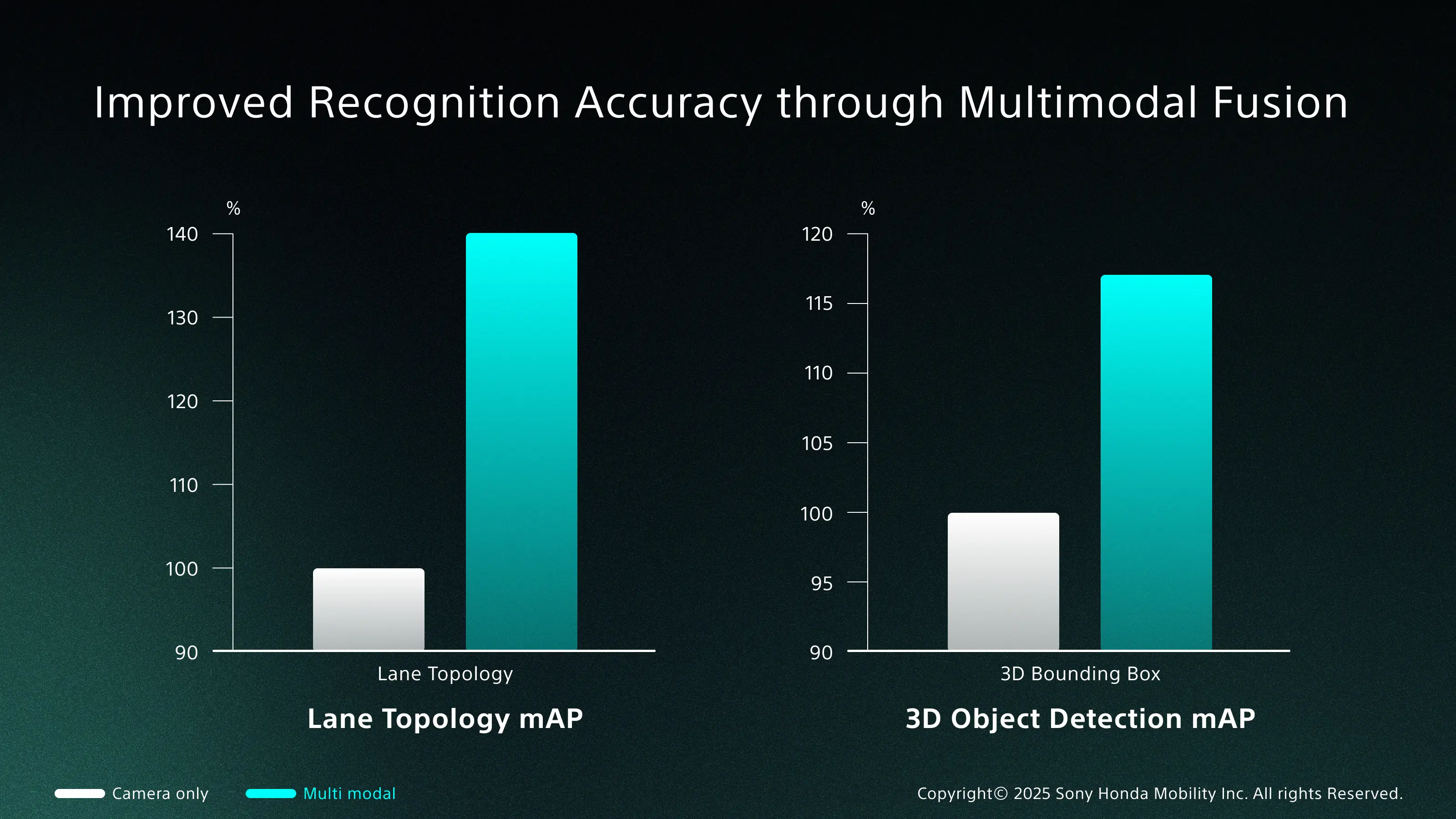

Driving environments are full of unexpected variables. Weather, lighting, and road conditions can change in an instant, demanding that AFEELA Intelligent Drive adapt across scenarios. To meet this challenge, AFEELA’s AI integrates insights from multiple data sources, combining the strengths of LiDAR, radar, and SD maps into a unified reasoning model. This multi-modal integration allows the system to cross-verify information, enabling more accurate and stable recognition of the environment. By linking perception with topological reasoning and context-aware decision-making, AFEELA moves closer to intelligence that is not only advanced in theory but reliable in practice. AI development for AFEELA is where world-leading AI research meets real-world automotive engineering, and it is a valuable environment for us engineers to take on the challenge of helping AI truly understand the real world.
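
The post does not describe how AFEELA cross-verifies its sensors. As a generic illustration of the idea, the sketch below swaps in two textbook techniques: a median-based consistency gate that rejects a reading disagreeing with the others, followed by inverse-variance weighting of the remaining readings. The sensor names, ranges, standard deviations, and the 2 m gate are made up, and `fuse_estimates` is a hypothetical helper.

```python
import statistics


def fuse_estimates(readings, gate_m=2.0):
    """Cross-check per-sensor range estimates, drop outliers, and fuse the rest.

    readings: {sensor_name: (range_m, std_dev_m)}
    A reading is rejected if it lies more than gate_m metres from the median of
    all readings; the survivors are combined by inverse-variance weighting.
    Returns (fused_range_m, fused_std_m, rejected_sensor_names),
    or None if every reading was rejected.
    """
    median = statistics.median(r for r, _ in readings.values())
    kept = {s: (r, sd) for s, (r, sd) in readings.items() if abs(r - median) <= gate_m}
    if not kept:
        return None
    rejected = sorted(set(readings) - set(kept))
    weights = {s: 1.0 / sd ** 2 for s, (_, sd) in kept.items()}
    total = sum(weights.values())
    fused = sum(weights[s] * kept[s][0] for s in kept) / total
    return fused, (1.0 / total) ** 0.5, rejected


# Usage: made-up range estimates (metres) to the same vehicle ahead.
readings = {
    "lidar": (42.1, 0.1),
    "radar": (42.6, 0.5),
    "camera": (55.0, 3.0),  # hypothetical misdetection, e.g. in glare
}
fused, sigma, rejected = fuse_estimates(readings)
print(round(fused, 2), rejected)  # 42.12 ['camera']
```

Inverse-variance weighting lets the most precise sensor dominate while still using the others, and the gate keeps a single inconsistent reading from dragging the fused estimate away.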

In the next post, we’ll explore how we’re improving learning efficiency.

The statements and information contained here are based on development stage data.
